Gr4-packet-modem complete flowgraph

Latest revision as of 10:49, 22 August 2024

This page describes the operation of a complete flowgraph that uses gr4-packet-modem and TUN Source/Sink blocks for IP communications. The Packet Transmitter and Packet Receiver blocks in this flowgraph are described in their respective pages gr4-packet-modem transmitter and gr4-packet-modem receiver.

A flowgraph similar to this is used in each of the applications included in gr4-packet-modem. Some applications use only the transmitter or the receiver part of the flowgraph, and some replace the SDR by a file or another block. See the README of the applications for more details.

Flowgraph

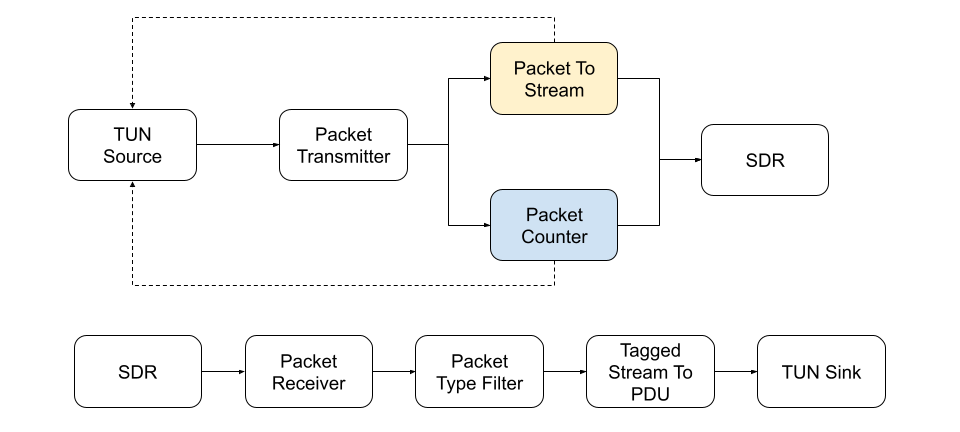

The figure in this section shows the flowgraph for the modem. Blocks in yellow are only present in burst mode, and blocks in blue are only present in stream mode.

In the transmitter section of the flowgraph, a TUN Source reads IP packets from a TUN network device. These IP packets are sent to the Packet Transmitter. The packets are marked with a tag that indicates that they are of type user data. When running in stream mode, the TUN Source also generates idle packets when there are no packets available in the TUN device. Design note: ideally the insertion of idle packets would be done in a block separate from the TUN Source. However, due to the peculiarities of the GNU Radio 4.0 runtime, it is not possible to achieve good transmit latency if this is done, so all the functionality is integrated in the TUN Source as a workaround. This is explained in more detail in the next section.

At the output of the Packet Transmitter there is either a Packet To Stream or a Packet Counter block, depending on whether the transmitter is using burst or stream mode. The role of these two blocks in latency management is described in the next section. In burst mode, the Packet To Stream block is used to convert from Pdu<std::complex<float>> packets, which might be discontinuous in time (there can be a time gap between packets), to a continuous stream of std::complex<float> samples. It does so by inserting zeros in the output when no packet is available at the input, similarly to the GNU Radio 3.10 Burst to Stream block. In stream mode this conversion is not necessary, because the output is already a continuous stream of std::complex<float> samples, so a Packet Counter block is used instead.
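The zero-filling behaviour of a packet-to-stream conversion can be sketched as follows. This is only an illustration of the idea, not the gr4-packet-modem implementation: `PduSketch` is a simplified stand-in for `Pdu<T>`, and `packet_to_stream_fill`, the `pending` queue and the `offset` bookkeeping are hypothetical names introduced here.

```cpp
#include <algorithm>
#include <cassert>
#include <complex>
#include <cstddef>
#include <deque>
#include <vector>

// Simplified stand-in for Pdu<T> (assumption: only the sample data matters here).
template <typename T>
struct PduSketch {
    std::vector<T> data;
};

using Sample = std::complex<float>;

// Hypothetical helper: fills every element of `out` from the queued packets and
// pads with zeros once no packet data is left, so the output is always continuous.
// `offset` tracks how many samples of the front packet have already been emitted.
inline void packet_to_stream_fill(std::deque<PduSketch<Sample>>& pending,
                                  std::size_t& offset, std::vector<Sample>& out) {
    std::size_t written = 0;
    while (written < out.size() && !pending.empty()) {
        const std::vector<Sample>& packet = pending.front().data;
        assert(offset <= packet.size());
        const std::size_t n = std::min(out.size() - written, packet.size() - offset);
        std::copy_n(packet.begin() + static_cast<std::ptrdiff_t>(offset), n,
                    out.begin() + static_cast<std::ptrdiff_t>(written));
        written += n;
        offset += n;
        if (offset == packet.size()) { // front packet fully emitted
            pending.pop_front();
            offset = 0;
        }
    }
    // No packet data left: insert zeros for the remainder of the output.
    std::fill(out.begin() + static_cast<std::ptrdiff_t>(written), out.end(),
              Sample{0.0f, 0.0f});
}
```

In this sketch a packet that does not fit in one output chunk is continued in the next call, and the gap between packets shows up as zeros in the output.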

The output IQ samples are sent to an SDR sink. At the moment such a sink is not available in GNU Radio 4.0, so instead a File Sink and a FIFO are used to connect this flowgraph to a GNU Radio 3.10 flowgraph that contains the SDR sink.

In the receiver section of the flowgraph, an SDR source introduces IQ samples into the flowgraph. Currently the only SDR source available in GNU Radio 4.0 is a Soapy Source block. These IQ samples are processed by the Packet Receiver, which only outputs packet payloads with a correct CRC-32. A Packet Type Filter block is used to drop idle packets, according to a tag at the beginning of each packet that indicates the packet type (using information present in the packet header). The Tagged Stream To PDU block converts from a stream of uint8_t items with packets delimited by "packet_len" tags to a stream of Pdu<uint8_t> packets, which is the required input format for the TUN Sink. The TUN Sink writes all the received packets into a TUN network device.
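The conversion from a tagged stream to packets can be illustrated with a small sketch. It is not the gr4-packet-modem code: `PacketLenTag`, `PduBytes` and `tagged_stream_to_pdus` are hypothetical names, and tags are modelled simply as an offset plus a length rather than as GNU Radio 4.0 tag objects.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical model of a "packet_len" tag attached to a stream position.
struct PacketLenTag {
    std::size_t offset;     // index of the first item of the packet in the stream
    std::size_t packet_len; // number of items in the packet
};

// Simplified stand-in for Pdu<uint8_t>.
struct PduBytes {
    std::vector<std::uint8_t> data;
};

// Cuts the stream into one packet per tag. Conversion stops at the first packet
// that is not yet completely present in `stream`.
inline std::vector<PduBytes> tagged_stream_to_pdus(const std::vector<std::uint8_t>& stream,
                                                   const std::vector<PacketLenTag>& tags) {
    std::vector<PduBytes> pdus;
    for (const PacketLenTag& tag : tags) {
        assert(tag.packet_len > 0); // assumption: packets are never empty
        if (tag.offset > stream.size() || tag.packet_len > stream.size() - tag.offset) {
            break; // packet not fully available yet
        }
        const auto first = stream.begin() + static_cast<std::ptrdiff_t>(tag.offset);
        const auto last = first + static_cast<std::ptrdiff_t>(tag.packet_len);
        pdus.push_back(PduBytes{std::vector<std::uint8_t>(first, last)});
    }
    return pdus;
}
```

Each resulting item is a whole packet, which is the form in which a sink that writes one IP packet at a time can consume it.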

Latency management

A well-known problem in GNU Radio transmitter flowgraphs is latency. The following example illustrates the problem. Consider a flowgraph that contains a source block that always produces output, except when it is backpressured by a full output buffer. The source is connected through a series of blocks to a sink. Such a flowgraph will typically have a large latency between the source and the sink, especially if the sink consumes data at a low sample rate. The reason is that when this flowgraph reaches a steady state, the buffers of all the blocks in the flowgraph become full, so the latency is at least the number of samples in all those buffers divided by the sample rate. A common workaround is to introduce some feedback from the sink to the source so as to limit the number of samples that can be "in flight" between them and prevent all those buffers from ever filling up. This feedback mechanism informs the source of how many samples the sink has consumed so far (or an equivalent metric), so that the source knows when to stop producing output even if its output buffer is not full. Matt Ettus explained this in more detail in his GRCon19 presentation Managing Latency in Continuous GNU Radio Flowgraphs (https://www.youtube.com/watch?v=jq0RewceCwc).
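The lower bound on latency mentioned above can be written as a short calculation. The function name `full_buffer_latency_seconds` is hypothetical, and the sketch assumes that every block in the chain runs at the same sample rate as the sink (no interpolation or decimation).

```cpp
#include <cassert>
#include <cstddef>
#include <numeric>
#include <vector>

// Lower bound on the source-to-sink latency once every buffer in the chain is
// full: total buffered samples divided by the rate at which the sink consumes them.
// Assumption: all buffers hold samples at the sink's sample rate.
inline double full_buffer_latency_seconds(const std::vector<std::size_t>& buffer_sizes,
                                          double sink_sample_rate_hz) {
    assert(sink_sample_rate_hz > 0.0);
    const std::size_t total =
        std::accumulate(buffer_sizes.begin(), buffer_sizes.end(), std::size_t{0});
    return static_cast<double>(total) / sink_sample_rate_hz;
}
```

As a hypothetical example, five full buffers of 65536 samples in front of a sink running at 48 kHz give a latency of about 6.8 seconds.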

The same kind of latency management scheme is used in the gr4-packet-modem transmitter in order to have an acceptable latency between the TUN Source and the SDR sink. In addition, due to the particularities of the GNU Radio 4.0 runtime, some additional tricks need to be applied to control the latency. The transmitter flowgraph of gr4-packet-modem looks like the example flowgraph used above to illustrate the problem of transmit latency. Even when operating in burst mode, the TUN Source might want to produce output all the time. This is the case if there is an application trying to send data through the TUN interface "as fast as possible". In practice this happens, for instance, with a TCP transfer, which relies on feedback from the other end (such as missing or duplicate ACKs) to throttle down the rate at which data is sent.

A potential problem with the implementation of the Packet Transmitter block is that, as explained in gr4-packet-modem transmitter, it uses Pdu<T> objects (essentially a std::vector<T>) to delimit packet boundaries, because tag processing is too inefficient in GNU Radio 4.0. By default, the circular buffers connecting the blocks have 65536 elements. This buffer size could be changed, but at the moment there is no API to do it. When using doubly-mapped circular buffers, the buffer size nevertheless needs to be at least equal to the page size, although there are other buffer implementations that would allow for very small buffers. The problem is that each Pdu<T> object counts as a single buffer element, so in practice the buffers connecting the blocks can fill up with as many as 65536 packets. This would cause an extremely high latency, so no such Pdu<T> buffer must ever be allowed to fill up. Therefore, most of the transmitter cannot rely on the backpressure given by output buffer fullness (on the other hand, letting a buffer of 65536 "scalar" samples fill up is usually okay for latency, unless the sample rate is very slow). To try to improve latency even more, the input of each Pdu<T> block in the transmitter is set to a max_samples of 1, in order to try to make the runtime call the block even when just one packet is available at its input.
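To see why a full buffer of Pdu<T> items is so much worse than a full buffer of scalar samples, compare the time needed to drain each one. The helper `drain_time_seconds`, the 1 Msps sample rate and the packet length of 1000 samples used in the note below are illustrative assumptions, not values taken from gr4-packet-modem.

```cpp
#include <cassert>
#include <cstddef>

// Time needed to drain a full buffer, given how many samples each buffer item
// carries: 1 for scalar samples, the packet length for Pdu<T> items.
inline double drain_time_seconds(std::size_t buffer_items, std::size_t samples_per_item,
                                 double sample_rate_hz) {
    assert(sample_rate_hz > 0.0);
    return static_cast<double>(buffer_items) * static_cast<double>(samples_per_item) /
           sample_rate_hz;
}
```

With these assumed numbers, `drain_time_seconds(65536, 1, 1e6)` is about 65.5 milliseconds for scalar samples, while `drain_time_seconds(65536, 1000, 1e6)` is about 65.5 seconds for packets of 1000 samples each.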

The latency management in the transmitter flowgraph shown above works in the following way. The Packet To Stream and Packet Counter blocks count the number of packets that pass through them. Each time a packet passes through the block, a message with the updated count (a cumulative count of all the packets since the start of the flowgraph) is sent back to the TUN Source. The TUN Source keeps track of how many output packets it has produced so far. It has a parameter that sets the maximum number of packets that are allowed to be inside the latency management region, defined as the set of blocks between the TUN Source and the Packet To Stream or Packet Counter. The TUN Source uses its count of packets produced and the messages arriving from the Packet To Stream or Packet Counter to determine whether it is allowed to produce an output packet at this moment or not.
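The bookkeeping described in this paragraph amounts to a simple credit scheme. The sketch below is a hypothetical model of it, not the TUN Source code: the class `LatencyGate` and its member names are introduced here for illustration only.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical model of the packet-count feedback used by the source.
class LatencyGate {
public:
    explicit LatencyGate(std::uint64_t max_packets_in_region)
        : max_in_region_(max_packets_in_region) {}

    // Called when a count message arrives from Packet To Stream or Packet Counter.
    // The count is cumulative, so an older (smaller) value is simply ignored.
    void on_count_message(std::uint64_t packets_exited) {
        assert(packets_exited <= produced_); // cannot exit more than was produced
        if (packets_exited > exited_) {
            exited_ = packets_exited;
        }
    }

    // Packets currently inside the latency management region.
    std::uint64_t in_flight() const { return produced_ - exited_; }

    bool may_produce() const { return in_flight() < max_in_region_; }

    // Records one produced packet; returns false and changes nothing if the
    // region is already full.
    bool try_produce() {
        if (!may_produce()) {
            return false;
        }
        ++produced_;
        return true;
    }

private:
    std::uint64_t max_in_region_;
    std::uint64_t produced_ = 0; // packets produced by the source so far
    std::uint64_t exited_ = 0;   // latest cumulative count reported downstream
};
```

Because the messages carry a cumulative count rather than an increment, a lost or delayed message only makes the source more conservative for a while. The next message brings the count up to date.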

The TUN Source performs three functions that would be better placed in three separate blocks:

- Reading IP packets from a TUN device.

- Generating idle packets when no other packets are available and an output packet is requested.

- Controlling the number of packets that enter into the latency management region.

The problem with trying to separate these functions into different blocks is that the blocks need to be connected with buffers that are outside of the latency management region. As explained above, it is not possible to rely on buffer fill backpressure for the connections of these blocks. So it is quite difficult to separate these functions and still achieve good latency.
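The way the three functions interact inside a single block can be sketched as one decision per output packet. This is a hypothetical model: `next_packet`, `OutputPacket` and the `tun_queue` container (standing in for packets readable from the TUN device) are names introduced here, and the real block works on the runtime's buffers rather than on a queue.

```cpp
#include <cassert>
#include <cstdint>
#include <deque>
#include <optional>
#include <utility>
#include <vector>

enum class PacketType { UserData, Idle };

struct OutputPacket {
    PacketType type;
    std::vector<std::uint8_t> payload;
};

// Hypothetical model of a single "produce one packet" decision in a combined source.
// `in_flight` is the number of packets currently inside the latency management region.
inline std::optional<OutputPacket> next_packet(
    std::deque<std::vector<std::uint8_t>>& tun_queue, std::uint64_t in_flight,
    std::uint64_t max_in_flight, bool stream_mode) {
    assert(max_in_flight > 0);
    if (in_flight >= max_in_flight) {
        return std::nullopt; // region is full: packets keep waiting in the TUN device
    }
    if (!tun_queue.empty()) {
        OutputPacket packet{PacketType::UserData, std::move(tun_queue.front())};
        tun_queue.pop_front();
        return packet;
    }
    if (stream_mode) {
        return OutputPacket{PacketType::Idle, {}}; // nothing to send: keep the stream going
    }
    return std::nullopt; // burst mode with no data: produce nothing
}
```

In this model the three decisions share the same state, so no packet ever has to sit in an intermediate buffer between them. That buffer is what would reappear, outside the latency management region, if the functions were split into separate blocks.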