Linear Equalizer

Revision as of 13:24, 30 March 2021

Performs linear equalization on a stream of complex samples.

The Linear Equalizer block equalizes the incoming signal using an FIR filter. If provided with a training sequence and a training start tag, data-aided equalization is performed starting at the tagged sample. When the training sequence ends, decision-directed (DD) equalization optionally continues, depending on the adapt_after_training flag. If no training sequence or no tag is provided, decision-directed equalization is performed.

This equalizer decimates to the symbol rate according to the samples per symbol parameter.
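The behaviour described above can be illustrated with a minimal pure-Python sketch. It is not the block's implementation: the function names, the QPSK decision device, the LMS update, the center-spike tap initialization, and the use of a symbol index (`train_start`) in place of a stream tag are all assumptions made for illustration. Decision-directed adaptation before the training start is likewise an assumption.

```python
import random


def slice_qpsk(y):
    """Decision device: nearest unit-energy QPSK point (assumed constellation)."""
    s = 2 ** -0.5
    return complex(s if y.real >= 0 else -s, s if y.imag >= 0 else -s)


def lms_equalize(samples, num_taps, sps, training=(), train_start=None,
                 adapt_after_training=True, mu=0.05):
    """Hypothetical LMS linear equalizer; returns (outputs, errors) per symbol.

    train_start is the symbol index where training begins (stand-in for the tag).
    """
    half = num_taps // 2
    taps = [0j] * num_taps
    taps[half] = 1 + 0j                       # center-spike start: pass-through
    padded = [0j] * half + list(samples) + [0j] * half
    outputs, errors = [], []
    for k in range(len(samples) // sps):      # one output per sps input samples
        window = padded[k * sps:k * sps + num_taps]
        y = sum(w * x for w, x in zip(taps, window))    # FIR filter output
        t = None if (train_start is None or not training) else k - train_start
        if t is not None and 0 <= t < len(training):
            ref, adapt = training[t], True              # data-aided update
        else:
            ref = slice_qpsk(y)                         # decision-directed
            adapt = t is None or t < 0 or adapt_after_training
        err = ref - y
        if adapt:                                       # LMS weight update
            taps = [w + mu * err * x.conjugate() for w, x in zip(taps, window)]
        outputs.append(y)
        errors.append(abs(err))
    return outputs, errors


def make_test_signal(n_symbols, sps, echo=0.4, seed=1):
    """Random QPSK symbols, repeated sps times, plus a one-sample echo."""
    rng = random.Random(seed)
    s = 2 ** -0.5
    symbols = [complex(rng.choice((-s, s)), rng.choice((-s, s)))
               for _ in range(n_symbols)]
    upsampled = [sym for sym in symbols for _ in range(sps)]
    received = [x + (echo * upsampled[n - 1] if n else 0)
                for n, x in enumerate(upsampled)]
    return symbols, received


if __name__ == "__main__":
    symbols, rx = make_test_signal(600, sps=2)
    out, err = lms_equalize(rx, num_taps=5, sps=2,
                            training=symbols[:64], train_start=0)
    print("mean error, first 20 symbols:", sum(err[:20]) / 20)
    print("mean error, last 20 symbols: ", sum(err[-20:]) / 20)
```

Passing an empty `training` sequence or leaving `train_start` as `None` makes the sketch run decision-directed from the first symbol, mirroring the fallback described above.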

Parameters

(R): Run-time adjustable

- Num Taps

- Number of taps for the FIR filter

- SPS

- Samples per Symbol of the input stream. The output will be downsampled to the symbol rate relative to this parameter

- Alg

- Adaptive algorithm object. This is the heart of the equalizer: it controls how the adaptive weights of the linear equalizer are updated

- Training Sequence

- Sequence of samples that will be used to train the equalizer. Provide an empty vector to default to a decision-directed (DD) equalizer

- Adapt After Training

- Flag that, when set to true, continues decision-directed (DD) adaptation after training on the specified training sequence

- Training Start Tag

- String to specify the start of the training sequence in the incoming data

Example Flowgraph

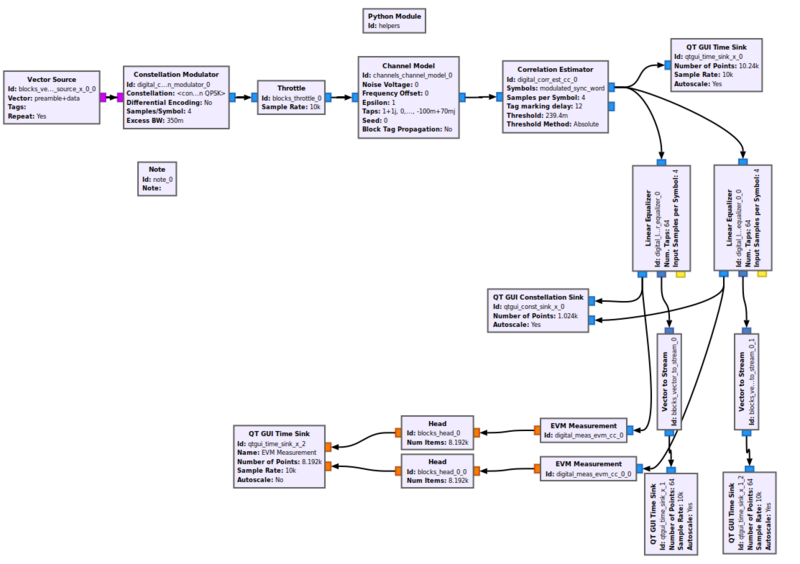

The included example, linear_equalizer_compare.grc, shows the equalization of a modulated sequence passed through a linear channel.

First, a known preamble followed by random data is modulated using the Constellation Modulator, then passed through a Channel Model that simulates a multipath channel with AWGN. Next, a correlation estimator finds the preamble and tags the stream where it was found.
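The front end of this flowgraph can be approximated outside GNU Radio with a short pure-Python sketch. The helper names, the two-tap channel, the noise level, and the peak-picking detector below are illustrative assumptions; they stand in for the Channel Model and correlation estimator blocks and do not reproduce their implementations. The sketch works at one sample per symbol and returns the detected start index rather than attaching a stream tag.

```python
import random


def random_qpsk(n, rng):
    """n random unit-energy QPSK symbols."""
    s = 2 ** -0.5
    return [complex(rng.choice((-s, s)), rng.choice((-s, s))) for _ in range(n)]


def multipath_awgn(samples, taps, noise_std, rng):
    """Convolve with the channel taps and add complex Gaussian noise."""
    out = []
    for n in range(len(samples)):
        acc = sum(h * samples[n - i] for i, h in enumerate(taps) if n - i >= 0)
        noise = complex(rng.gauss(0, noise_std), rng.gauss(0, noise_std))
        out.append(acc + noise)
    return out


def find_preamble(received, preamble):
    """Return the offset with the largest correlation magnitude."""
    best_index, best_mag = 0, -1.0
    for start in range(len(received) - len(preamble) + 1):
        corr = sum(received[start + i] * preamble[i].conjugate()
                   for i in range(len(preamble)))
        if abs(corr) > best_mag:
            best_index, best_mag = start, abs(corr)
    return best_index


if __name__ == "__main__":
    rng = random.Random(7)
    preamble = random_qpsk(32, rng)
    tx = random_qpsk(20, rng) + preamble + random_qpsk(100, rng)
    rx = multipath_awgn(tx, [1, 0.3], 0.05, rng)
    print("preamble detected at index", find_preamble(rx, preamble))
```

The detected index plays the role of the training start tag: it tells a downstream equalizer which received sample lines up with the first training symbol.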

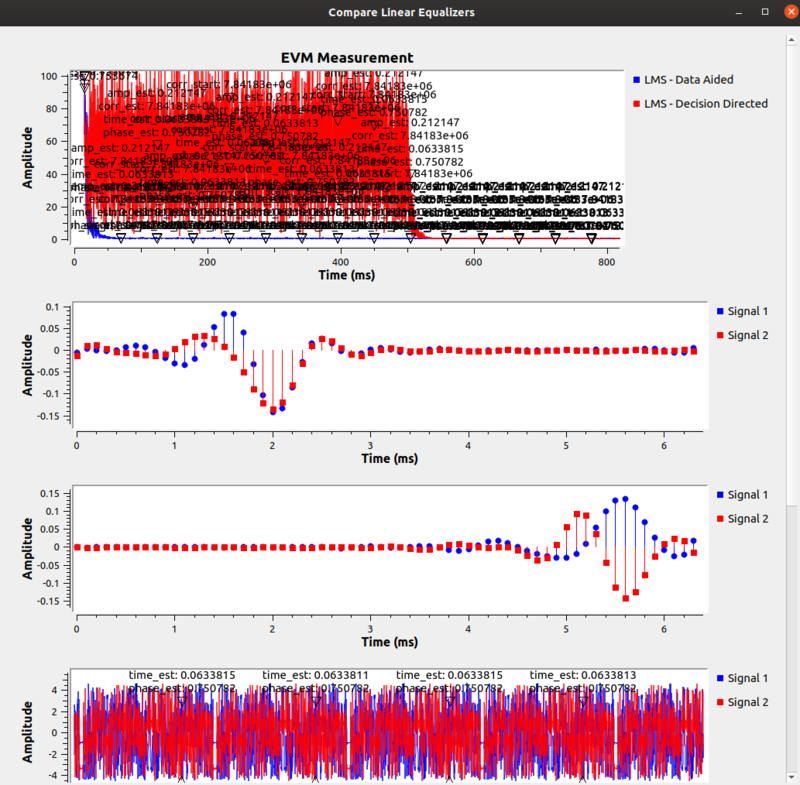

Two linear equalizer blocks are used to compare training on the known preamble against purely decision-directed operation.

The resulting graphs show that the training-sequence-based equalizer converges more quickly than the decision-directed one.